We are living in a world where a new type of device appears every few minutes. IoT is still a buzzword, but not for long. We are so used to IoT, devices and connectivity that we cannot imagine our world without them.

Big players’ world

Enterprises have been looking at IoT, and at how they can connect their assets to the internet, for a while. There is a lot of movement in this area. We see not only consumer devices, but also factories and other kinds of assets starting to talk to each other.

This transition is normal, and companies can gain a lot from it. Insight into their systems, cost optimization and predictive maintenance are only some of the drivers of this trend.

Asset size

Inside a factory, it is normal to have a dashboard where you can see the state of all devices in real time. But how many devices and sensors does a factory have: 10,000, 50,000, 250,000? Can a support team manage a factory of this size, or ten factories of this size?

If we connect 10 or 50 factories to a system that monitors them, does it make sense to monitor all the devices in real time? Can we react from a remote location if a robot breaks?

Of course, there are some actions that you can take remotely. Stopping the line, disabling a device or rerouting the load to another device are all possible. But does it make sense to do this remotely? Does it make sense to send telemetry data thousands of kilometers away only to be able to process it and react in real time?

Can we decide and act at factory level? Decisions can be taken at factory level by a team or by an automated system. Automation can be our best friend in this situation.

Predictive maintenance

Predictive maintenance is one of the most powerful forces shaping the IoT world. The amount of money that can be saved with predictive maintenance is enormous. Imagine that you predict and resolve an issue before it appears. The number of incidents can be drastically reduced. Not only that, you can group and schedule service activities instead of losing money on unplanned incidents.

We are not talking only about money. The quality of people’s lives would improve considerably. Hospitals would not have medical instruments out of service, and your car would never break down in the middle of nowhere.

Cloud and Microsoft Azure

It is a clear trend now that you should send all your data to the cloud or build a powerful datacenter. Once the information is there, you can start to process it and extract valuable insights. It is usually cheaper to use an external provider for this kind of service (storing and processing) than to develop it in-house. It is not that you cannot do it yourself, but it is more expensive and you lose your main focus. Many companies are not IT companies. If your business is producing robots, you are probably extraordinary at robots, but you might not have internal skills for big data or machine learning. It is not your main business, so let specialists handle it.

From this perspective, service providers, especially cloud ones, are a game changer. They enable us to accomplish things that would otherwise be impossible.

Real Time

Real-time information is very useful when you need the state of your devices and want to react actively to events happening at device level. In the context of real time, two questions are relevant:

- What is real time for us?

- What data do we need at different levels?

What is real time for us?

Real time has a different meaning for each of us. For me, real time might mean a granularity of one hour. Finding out now rather than one hour later that a device in a factory in Africa will be out of service for 30 minutes does not add any extra value for me.

But the people who work in the factory might need to know within 10 seconds that the device will be down for 30 minutes. The same applies to data that is normally displayed in reports. Companies tend to say that they need the data in their reports or dashboards updated immediately. When you ask them how often they check or need this data, they will say once per day. A system whose dashboard updates every 10 seconds can cost 10 or even 50 times more to implement than one that updates once a day.

What data do we need at different levels?

The current trend is to store all data produced by devices in one central location, where it can be processed, analyzed and understood. This is great; the insight that we get in this way is unimaginable.

But I would like to challenge you. Do we need all the device telemetry at a 0.1-second frame rate? For predictive maintenance systems, this might not be so relevant. For some systems, telemetry aggregated at 0.1 second, 1 second or 10 seconds gives the same result. Of course, there are exceptions, but they are exceptions.

There is a big difference between “it is possible” and “we need it”. The main driver should be whether we need it, and at what frame rate.

Often, big companies that are now connecting their systems and devices to a central system try to store all the information in one central location at the frame rate produced by those systems and devices. With current technology and solutions, this is possible. But the price can be very high, and they might not need it.
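To get a feeling for the volumes involved, here is a small back-of-the-envelope calculation. It is only a sketch: the factory size of 50,000 devices is an assumption picked from the range mentioned earlier, and `samples_per_day` is a helper name invented for this example. It counts how many samples per day reach the central location if every device reports once per interval.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def samples_per_day(interval_seconds: float, devices: int = 1) -> int:
    """Samples produced per day when every device reports once per interval."""
    return round(SECONDS_PER_DAY / interval_seconds) * devices


# Assumed factory size, picked from the range mentioned earlier in the post.
DEVICES = 50_000

for interval in (0.1, 1, 10):
    total = samples_per_day(interval, DEVICES)
    print(f"every {interval} s: {total:,} samples per day")
```

Each step from 0.1 second to 1 second to 10 seconds cuts the number of samples that must be sent, stored and processed by a factor of ten.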

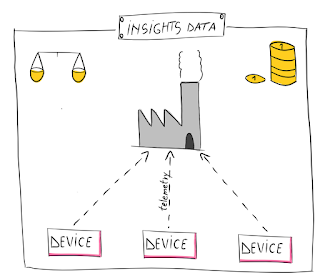

In the above diagram, we have a cluster of factories around the globe. Each factory has an on-premises system that monitors the health of the devices inside the factory. Telemetry data is collected at a frame rate of 0.1 second, processed and displayed on factory dashboards.

Device data is consolidated at a 2-second frame rate and sent to a central location where the machine learning and predictive maintenance systems run. From a cost perspective this is not cheaper, but having data from all factories in one place is more valuable for understanding the data and making better predictions.

From the same stream of data that is sent every 2 seconds, another system consolidates data at a frame rate of 5 minutes and displays it on a central dashboard. Reports are generated from this information only every 6 hours.
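The tiers described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation of any real product: the `Aggregate` type, the `downsample` and `rollup` functions and the sample values are all made up for this example, and the choice of minimum, maximum and average as the summary is an assumption. Timestamps are integer milliseconds, which keeps window boundaries exact.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[int, float]  # (timestamp in milliseconds, measured value)


@dataclass(frozen=True)
class Aggregate:
    window_start_ms: int
    minimum: float
    maximum: float
    average: float
    count: int


def downsample(samples: Iterable[Sample], window_ms: int) -> List[Aggregate]:
    """Group raw samples into fixed windows and summarise each window."""
    buckets: Dict[int, List[float]] = {}
    for timestamp_ms, value in samples:
        buckets.setdefault(timestamp_ms // window_ms, []).append(value)
    return [
        Aggregate(index * window_ms, min(vals), max(vals), mean(vals), len(vals))
        for index, vals in sorted(buckets.items())
    ]


def rollup(aggregates: Iterable[Aggregate], window_ms: int) -> List[Aggregate]:
    """Merge fine-grained aggregates into coarser windows."""
    buckets: Dict[int, List[Aggregate]] = {}
    for agg in aggregates:
        buckets.setdefault(agg.window_start_ms // window_ms, []).append(agg)
    result = []
    for index, group in sorted(buckets.items()):
        total = sum(a.count for a in group)
        result.append(Aggregate(
            window_start_ms=index * window_ms,
            minimum=min(a.minimum for a in group),
            maximum=max(a.maximum for a in group),
            # Weight each average by its sample count so the result stays exact.
            average=sum(a.average * a.count for a in group) / total,
            count=total,
        ))
    return result


# Ten minutes of made-up readings from one device, one sample every 100 ms.
raw = [(i * 100, float(i % 10)) for i in range(6000)]
two_second = downsample(raw, 2_000)         # sent to the central ML system
five_minute = rollup(two_second, 300_000)   # shown on the central dashboard
print(len(raw), len(two_second), len(five_minute))
```

The raw samples never leave the factory. Only the 2-second aggregates travel to the central location, and the 5-minute tier is computed from that same stream rather than from the raw data.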

You could say that there are people outside the factory who need to know if something goes wrong. Yes, this is possible, so why not send an alert, an email, an automated phone call or an SMS directly from the factory when something like this happens?
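A factory-level rule of this kind can be very small. The sketch below assumes a simple temperature threshold; the `check_and_alert` function, the `Notifier` hook and the device name are hypothetical and stand in for whatever email, SMS or phone gateway the factory already has.

```python
from typing import Callable, List

# Hypothetical hook: in practice this could wrap an email, SMS or phone-call gateway.
Notifier = Callable[[str], None]


def check_and_alert(device_id: str, temperature_c: float,
                    limit_c: float, notify: Notifier) -> bool:
    """Notify and return True when the reading is above the limit."""
    if temperature_c <= limit_c:
        return False
    notify(f"Device {device_id}: {temperature_c:.1f} C is above the {limit_c:.1f} C limit")
    return True


# Usage with a list standing in for the real notification channel.
outbox: List[str] = []
check_and_alert("robot-17", 71.0, 80.0, outbox.append)   # within limits, nothing sent
check_and_alert("robot-17", 92.5, 80.0, outbox.append)   # over the limit, one alert
print(outbox)
```

The decision is taken next to the device, and only the alert itself travels outside the factory.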

Conclusion

Let’s try to look behind the trends and identify where, and at what level, we need telemetry data from devices. With this in mind, we might be able to build much better systems at a lower price and with a better SLA.

In a future post we will look at how we can create such a system using existing technologies.

